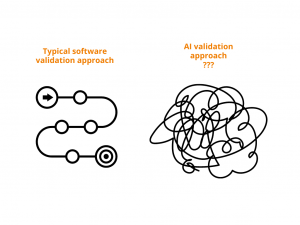

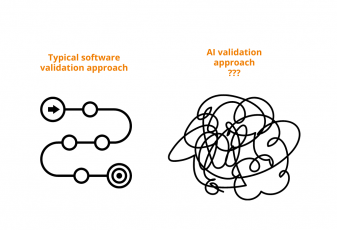

Let’s agree that in our regulated industry we take an EXTRA cautious approach towards adopting new technologies. The standard validation approach for standard computerized systems already includes requirements for:

- Installation Qualification (IQ)

- Design Qualification (DQ)

- Operational Qualification (OQ)

- Performance Qualification (PQ)

That covers the standard all-time favorites: EDC, eTMF, CTMS, etc. But what if we add AI to the mix, with test results that change as it learns from more and more data? How do you validate something that may very well give you a different outcome with every run?

When applying AI to the validation of GxP computerized systems, the industry faces a unique challenge: the dynamic nature of AI, particularly in data handling, defies traditional validation frameworks focused on system performance and installation. This evolving data landscape makes it difficult to guarantee results with absolute certainty. Therefore, the question remains: What aspects need validation to ensure confidence in AI-driven processes, and is it possible to achieve 100% certainty?

Spoiler alert! We know how! And we will share more in the upcoming weeks.

The traditional validation approach, well established over the past decade, involves comprehensive documentation to ensure systems are thoroughly tested and validated.

In recent years, regulatory bodies have begun to adapt to technological advancements. The FDA has introduced guidance on Computer Software Assurance (CSA) that applies a risk-based approach to software validation. Similarly, the International Society for Pharmaceutical Engineering (ISPE) updated its GAMP® 5 guide, advocating critical thinking and iterative methods in validation processes.

Moreover, the integration of AI in daily operations is no longer just a trend; early adopters are leveraging AI to streamline GxP-related activities.

However, the use of AI in validation and documentation processes raises significant questions about trust and reliability. Can the results produced by AI, or documents created by AI, be trusted? This dilemma suggests a need to blend traditional validation methods with innovative technologies.

The FDA’s publication on “Artificial Intelligence and Machine Learning in Software as a Medical Device” provides insights into regulatory directions for AI. In December 2023, the European Medicines Agency (EMA) released its “Artificial Intelligence Workplan”, outlining its approach to AI regulation until 2028. Despite these efforts, the absence of definitive regulations for AI in this context leaves companies navigating uncertain waters.