Integrations in Clinical Trials: An IT/ETL Perspective

August 28, 2025

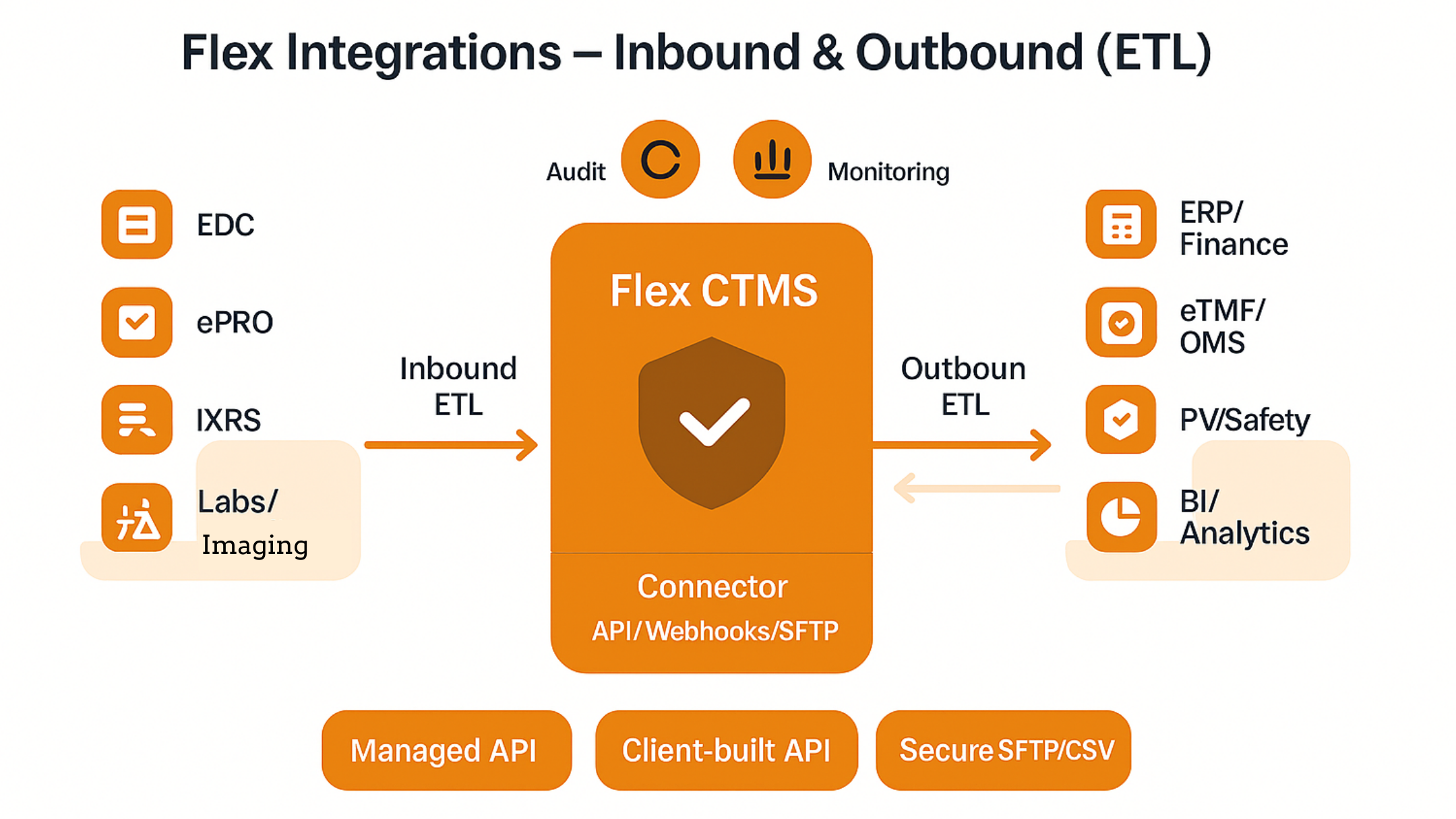

How Flex Databases connects your study ecosystem – reliably, securely, and at scale.

- Integration connects software systems so they can share data automatically, eliminating duplicate entry.

- In clinical trials, the most common pattern is EDC → CTMS, followed by CTMS → ERP/Finance and CTMS → BI/analytics.

- Flex Databases supports three delivery models: Managed API integration, Client‑built API integration, and SFTP/CSV batch ingestion.

- We design integrations using proven ETL patterns (Extract → Transform → Load), with a focus on data quality, auditability, and compliance.

- A typical project flows through scope & data mapping → connector build → study‑level configuration → UAT → go‑live, with monitoring and change control from day one.

Why integrations matter to sponsors and CROs

Integration means that data entered once in a system of record is propagated automatically wherever it is needed. For clinical operations teams this translates into:

- Time saved: less manual entry across systems.

- Higher data quality: fewer copy‑paste and transcription errors.

- Operational efficiency: CTMS dashboards and workflows reflect near real‑time updates.

- Consistency & compliance: aligned datasets across platforms support inspection readiness and controlled reporting.

Core integration patterns we see most often

1) EDC → CTMS (operational data sync)

Goal: Populate Subjects, Visits, and Adverse Events from EDC into CTMS to drive monitoring, milestones, and payments.

How it works:

- Extract from EDC (API/webhooks where available).

- Transform to operational semantics (site codes, subject keys, visit windows, status codes).

- Load into CTMS with reliable update logic and audit trail.

Outcome: CTMS users work on current clinical data without re‑typing what already exists in EDC.
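
To make the three steps concrete, here is a minimal sketch of an extract, transform, load pass over subject records. It is an illustration under stated assumptions, not the Flex API: the field names (`siteId`, `subjectNo`), the `SITE_CODES` mapping, and the in-memory `ctms` dictionary are all hypothetical stand-ins for a real EDC payload and a real CTMS target.

```python
from typing import Iterable

# Hypothetical mapping from EDC site identifiers to CTMS site codes.
SITE_CODES = {"1001": "DE-01", "1002": "FR-03"}


def extract(edc_records: Iterable[dict]) -> Iterable[dict]:
    """Stand-in for an EDC API call or webhook payload; yields raw records."""
    yield from edc_records


def transform(raw: dict) -> dict:
    """Translate one raw EDC record into the shape the target expects."""
    site_code = SITE_CODES[raw["siteId"]]
    return {
        "site_code": site_code,
        "subject_key": f"{site_code}/{raw['subjectNo']}",
        "status": raw["status"].strip().lower(),
    }


def load(record: dict, ctms: dict, audit: list) -> None:
    """Insert or update by subject_key; log before/after only when data changed."""
    before = ctms.get(record["subject_key"])
    if before != record:
        ctms[record["subject_key"]] = record
        audit.append({"key": record["subject_key"], "before": before, "after": record})


def run(edc_records: Iterable[dict], ctms: dict, audit: list) -> None:
    """Run one extract -> transform -> load pass."""
    for raw in extract(edc_records):
        load(transform(raw), ctms, audit)
```

Because `load` compares the incoming record with what is already stored, running the same batch twice leaves the target and the audit log unchanged, which is one way to read "reliable update logic".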

2) CTMS → ERP/Finance (payments)

Goal: Send approved visit payments/invoices from CTMS to ERP (e.g., SAP); receive status back when processed.

How it works:

- CTMS publishes approved line items to ERP.

- ERP returns acknowledgements and finalization signals.

- CTMS updates payment status and retains a full audit trail.

Outcome: Finance operates in ERP; CTMS remains the operational source of truth for site payments.
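
The status round trip can be pictured as a small state machine on the CTMS side. The sketch below is an assumption-laden illustration: the status names (`approved`, `sent`, `acknowledged`, `paid`, `rejected`) and the `apply_erp_signal` helper are hypothetical, and real values depend on the CTMS and ERP involved.

```python
from datetime import datetime, timezone

# Hypothetical payment lifecycle; real status names depend on the CTMS and ERP.
ALLOWED = {
    "approved": {"sent"},
    "sent": {"acknowledged", "rejected"},
    "acknowledged": {"paid", "rejected"},
    "paid": set(),
    "rejected": set(),
}


def apply_erp_signal(payment: dict, new_status: str, actor: str, audit: list) -> None:
    """Move a payment to new_status if the transition is allowed, and log it."""
    old_status = payment["status"]
    if new_status not in ALLOWED.get(old_status, set()):
        raise ValueError(
            f"Illegal transition {old_status} -> {new_status} for {payment['id']}"
        )
    payment["status"] = new_status
    # Audit entry records who changed what, when, and the before/after values.
    audit.append({
        "payment_id": payment["id"],
        "who": actor,
        "when": datetime.now(timezone.utc).isoformat(),
        "before": old_status,
        "after": new_status,
    })
```

Rejecting out-of-order signals (for example, a late "sent" arriving after "paid") keeps the CTMS status from moving backwards when messages are retried or delayed.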

3) CTMS → BI (self‑service analytics)

Goal: Feed operational KPIs (e.g., protocol deviations, site performance) into tools like Power BI for portfolio‑level dashboards.

How it works:

- Authorized BI connectors pull CTMS data via web APIs on a schedule.

- Row‑level filters and aggregation logic are maintained in BI.

Outcome: Teams blend CTMS metrics with corporate data to create a single analytics view.

Integration models we offer

Choose the model that fits your technical capacity and operating constraints. All three use the same security, validation, and audit principles.

- Managed API integration (turn‑key)

We design, build, and operate the connector using the Flex API and the counterpart system’s APIs. Best for sponsors/CROs who want a fully managed solution and predictable SLAs.

- Client‑built API integration (we enable Flex API, you build)

Your technical team builds the connector using Flex API endpoints to push/pull data. We provide API documentation, sandboxes, and guidance.

- Secure SFTP/CSV ingestion (batch)

When an external system lacks suitable APIs, we support structured CSV drops over SFTP. Flex processes files on a defined schedule. This is less preferred than API‑based approaches but valuable in constrained environments. Ideally, the SFTP server is client‑hosted.
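
For the batch model, a structured CSV drop is usually checked against an agreed column contract before any row is processed. The following is a rough sketch of that idea only; the column names in `REQUIRED_COLUMNS` and the `read_visit_file` helper are hypothetical, and the real file layout is agreed per study.

```python
import csv
import io

# Hypothetical column contract for a visits file; the real layout is agreed per study.
REQUIRED_COLUMNS = {"site_code", "subject_no", "visit_name", "visit_date"}


def read_visit_file(text: str) -> tuple[list[dict], list[str]]:
    """Parse CSV text; return (accepted rows, error messages with line numbers)."""
    reader = csv.DictReader(io.StringIO(text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        # Reject the whole file when the header does not match the contract.
        return [], [f"file rejected: missing columns {sorted(missing)}"]
    rows, errors = [], []
    for row in reader:
        empty = sorted(c for c in REQUIRED_COLUMNS if not (row[c] or "").strip())
        if empty:
            errors.append(f"line {reader.line_num}: empty {empty}")
        else:
            rows.append(row)
    return rows, errors
```

Reporting the line number with each rejected row lets the file owner correct and resend only what failed.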

ETL done right: design principles that keep data trustworthy

Extract

- Prefer event‑driven APIs/webhooks; fall back to scheduled polling when necessary.

- Use incremental extraction (timestamps, change tokens) to minimize load.

- Work within each system's limits and data volumes so that both systems stay responsive.
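
Timestamp-based incremental extraction can be sketched as a watermark that advances after each run. This is a minimal example under assumptions: `fetch_page(since, offset, limit)` is a hypothetical client callback standing in for a real source API, and each record is assumed to carry a `modified_at` datetime.

```python
from datetime import datetime
from typing import Callable


def extract_changes(
    fetch_page: Callable[..., list],
    watermark: datetime,
    page_size: int = 100,
) -> tuple[list, datetime]:
    """Fetch records modified after `watermark`, one bounded page at a time.

    Returns the changed records and the new watermark to store for the next run.
    """
    records, offset, newest = [], 0, watermark
    while True:
        page = fetch_page(since=watermark, offset=offset, limit=page_size)
        records.extend(page)
        for rec in page:
            newest = max(newest, rec["modified_at"])
        if len(page) < page_size:  # a short page means there is nothing more to read
            return records, newest
        offset += page_size
```

Paging with a fixed `page_size` keeps each request small, and persisting the returned watermark means the next run asks only for what changed since this one.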

Transform

- Keep relationships intact across study, country, site, subject, visit and AE data.

- Normalize codes & statuses (e.g., source → canonical mapping for subject and visit states).

- Handle time zones and effective‑dated updates correctly.

- Validate types and ranges (dates, numerics, controlled terms) with actionable error messages.
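
Two of these transform rules, status normalization and time zone handling, can be illustrated together with validation that returns actionable messages. The sketch below assumes a hypothetical `VISIT_STATUS` mapping and a hypothetical `occurred_at` field carrying an ISO 8601 timestamp with a UTC offset; it does not cover effective-dated updates.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical source -> canonical visit status mapping.
VISIT_STATUS = {"SCHED": "scheduled", "DONE": "completed", "MISS": "missed"}


def transform_visit(raw: dict) -> tuple[Optional[dict], list[str]]:
    """Return (canonical visit, errors); the visit is None if any check fails."""
    errors = []
    status = VISIT_STATUS.get(str(raw.get("status", "")).strip().upper())
    if status is None:
        errors.append(
            f"status: {raw.get('status')!r} is not one of {sorted(VISIT_STATUS)}"
        )
    occurred_utc = None
    try:
        occurred = datetime.fromisoformat(raw["occurred_at"])
        if occurred.tzinfo is None:
            errors.append(
                "occurred_at: UTC offset is required, e.g. 2025-03-01T09:30:00+01:00"
            )
        else:
            # Store every timestamp in UTC so sites in different zones compare correctly.
            occurred_utc = occurred.astimezone(timezone.utc)
    except (KeyError, TypeError, ValueError):
        errors.append(
            f"occurred_at: {raw.get('occurred_at')!r} is not an ISO 8601 timestamp"
        )
    if errors:
        return None, errors
    return {"status": status, "occurred_at": occurred_utc}, []
```

Collecting all errors for a record, rather than stopping at the first, gives the data owner one complete list to fix.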

Load

- Use reliable update logic keyed by natural or surrogate IDs.

- Guarantee atomicity per logical unit (e.g., subject with child visits).

- Maintain a full audit trail (who/what/when/before‑after) to support GxP processes.
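
As one possible reading of these load rules, the sketch below upserts a subject and its child visits in a single transaction and writes an audit row alongside. It uses an in-memory SQLite database purely for illustration; the schema, the `open_store` and `load_subject` helpers, and the column names are assumptions, not the actual CTMS data model.

```python
import json
import sqlite3


def open_store() -> sqlite3.Connection:
    """In-memory stand-in for the target database (hypothetical schema)."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE subject (subject_key TEXT PRIMARY KEY, status TEXT NOT NULL);
        CREATE TABLE visit (subject_key TEXT NOT NULL, name TEXT NOT NULL,
                            visit_date TEXT NOT NULL,
                            PRIMARY KEY (subject_key, name));
        CREATE TABLE audit (who TEXT, changed_at TEXT DEFAULT CURRENT_TIMESTAMP,
                            entity TEXT, old_value TEXT, new_value TEXT);
    """)
    return con


def load_subject(con: sqlite3.Connection, subject: dict, visits: list, who: str) -> None:
    """Upsert one subject and its visits as a single logical unit."""
    with con:  # commits on success, rolls back every statement on any exception
        row = con.execute(
            "SELECT status FROM subject WHERE subject_key = ?",
            (subject["subject_key"],),
        ).fetchone()
        before = {"status": row[0]} if row else None
        con.execute(
            """INSERT INTO subject (subject_key, status) VALUES (?, ?)
               ON CONFLICT(subject_key) DO UPDATE SET status = excluded.status""",
            (subject["subject_key"], subject["status"]),
        )
        for v in visits:
            con.execute(
                """INSERT INTO visit (subject_key, name, visit_date) VALUES (?, ?, ?)
                   ON CONFLICT(subject_key, name)
                   DO UPDATE SET visit_date = excluded.visit_date""",
                (subject["subject_key"], v["name"], v["visit_date"]),
            )
        con.execute(
            "INSERT INTO audit (who, entity, old_value, new_value) VALUES (?, ?, ?, ?)",
            (who, subject["subject_key"], json.dumps(before),
             json.dumps({"status": subject["status"]})),
        )
```

If any visit fails a constraint, the subject row and the audit entry are rolled back with it, so the target never holds a subject with only some of its visits.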

Quality & Observability

- Pre‑load schema checks and business rules (e.g., subject must belong to a known site; visit dates must be within window).

- End‑to‑end monitoring & alerting (pipeline health, latency, error budgets).

- Reconciliation views to compare source vs. target counts and detect drift.
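
The pre-load rules and the reconciliation view mentioned above can be sketched in a few lines. This is an illustrative example only: the record fields (`site_code`, `target_date`, `actual_date`), the default three-day window, and the helper names `check_visit` and `reconcile` are hypothetical.

```python
from collections import Counter
from datetime import date


def check_visit(visit: dict, known_sites: set, window_days: int = 3) -> list[str]:
    """Apply two business rules before load; return a list of violations."""
    errors = []
    if visit["site_code"] not in known_sites:
        errors.append(f"unknown site {visit['site_code']!r}")
    offset = abs((visit["actual_date"] - visit["target_date"]).days)
    if offset > window_days:
        errors.append(f"visit is {offset} days from target; window is {window_days}")
    return errors


def reconcile(source_rows: list, target_rows: list, group_by: str = "site_code") -> dict:
    """Compare per-group record counts; return only the groups where they differ."""
    src = Counter(r[group_by] for r in source_rows)
    tgt = Counter(r[group_by] for r in target_rows)
    return {
        key: {"source": src[key], "target": tgt[key]}
        for key in sorted(src.keys() | tgt.keys())
        if src[key] != tgt[key]
    }
```

An empty result from `reconcile` means source and target agree on counts for every group; any entry points to where drift should be investigated.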

What we map (at a glance)

We align the core operational entities across your study systems (Subjects, Visits, Adverse Events, Sites, and Payments) using your study configuration and business rules. The exact field‑to‑field mapping is agreed during design and documented for audit and change control.

Deniz Erk – Senior Analyst

Deniz Erk is a product development professional with an engineering background and eight years of experience spanning IT business analysis and strategic management roles. Over the past two years, Deniz has focused on the clinical trials industry, bringing a holistic, solution-oriented approach to the development of innovative CTMS products.